Feed Aggregator Page 691

Rendered on Sat, 26 Aug 2023 01:39:19 GMT

Rendered on Sat, 26 Aug 2023 01:39:19 GMT

via Elm - Latest posts by @pit Pit Capitain on Sat, 19 Aug 2023 20:34:17 GMT

Thank you for the article, Dwayne.

If you want to improve performance even more, you could use the following approach:

Parser.getOffset and Parser.getSource and then String.dropLeft to get the string that hasn’t been parsed yetParser module, for example via loops with String.unconsParser.token, giving it the parsed text as the tokenHow to handle the failure case depends upon which error you want to generate at which position. One solution would be to advance the parser to the failing position with Parser.token like in the success case, followed by a Parser.chompIf isGood.

If you don’t need the error to be reported at the exact first failing position, I found that you can improve performance even more for chompers with a given lower bound (chompAtLeast, chompBetween, and chompExactly) by using String.all isGood for the part up to the lower bound instead of a loop.

This is just a very brief description. I can send you a sample implementation, if you like.

Thank you again for the article and the code.

via Elm - Latest posts by @jfmengels Jeroen Engels on Sat, 19 Aug 2023 18:29:13 GMT

In my opinion, it depends a lot, and I guess I search for what makes the better experience rather than caring about big or small rules.

For instance, the NoUnused.Modules got “merged” into NoUnused.Exports because when they were separate, the result was a bit terrible. Since NoUnused.Exports autofixed errors (while NoUnused.Modules didn’t), if there was an unused module, NoUnused.Exports would remove every export one by one (and then NoUnused.Variables would remove every function one by one) until there was nothing to remove. This was annoying and extremely time-consuming. With the 2 merged and caring about each other’s situation, we now don’t autofix exports for modules that are never imported and we let the user remove the module manually. (Well, until we have an automatic fix at least).

For NoUnused.Variables, we could go either way: keep it as is or split it up. The main reason why I’m thinking of keeping it as one is because computing unused imports and unused variables is very much the same work, so separating them into two rules would mean the amount of work would be doubled (meaning it’s slower).

One reason to separate the two would be for instance if you wanted to disable one of two report types but not the other (in this case, likely the variables part). In practice, I find the NoUnused.Variables rarely suppressed or not enabled (not that I have very much data though).

Happy to discuss this more (and be convinced otherwise) but probably better done in a separate thread ![]()

via Elm - Latest posts by @rupert Rupert Smith on Sat, 19 Aug 2023 13:23:11 GMT

BTW - I think I prefer small rules to big ones, so its possible to check for unused modules or exports independantly. Are you generally prefering to merge things into more comprehensive rules, or does the NoUnused.Exports come with a set of flags to select what is actually checks? Not that you have to listen to me, but I thought I would express this preference as a suggestion anyway, see if others think the same or different.

Similar with the NoUnused.Variables rules which also checks for unused imports. I’d like to clean up imports without having to run the full NoUnused.Variables sometimes.

via Elm - Latest posts by @jfmengels Jeroen Engels on Sat, 19 Aug 2023 12:39:30 GMT

The idea behind my suggestion for using --report=json is that you run only the NoUnused.Modules (--rules NoUnused.Modules) rule and interpret the output from the tool without the use of --fix-all.

I don’t recommend you run other rules than this one until you want to get to the next step. Using other rules will just slow you down in your current objective. Once it’s done, turn on other rules one by one.

If you want to try applying fixes AND using --report=json, you can probably do that though I have never tried it. In this case, you can use --fix-all-without-prompt which won’t prompt you and should therefore work better.

For the elm-format error, I’m wondering which error caused an elm-format parsing failure. It would be great if you could try to provide an SSCCE if/when you have the time. You have 810 fixes right now so it’s hard to tell you which one caused it, but maybe it’s the NoExposingEverything one that creates a very long line, longer than supported (Elm 0.19.1 only supports lines that are less than 65536 characters long IIRC, and I wouldn’t be surprised if that’s causing). Happy to help you debug (I’m jfmengels on Slack, but there’s also a #elm-review channel there).

via Elm - Latest posts by @Birowsky on Sat, 19 Aug 2023 12:18:33 GMT

Hey Jeroen!

I did some basic setup, I ran this command:

elm-review --fix-all --ignore-dirs=src/scripts/Api,src/scripts/ElmFramework --ignore-files=src/scripts/AssetsImg.elm,src/scripts/AssetsSvg.elm,src/scripts/IconSvg.elm

it says that elm-format failed with “Unable to parse file”:

I also tried producing json output with:

elm-review --template jfmengels/elm-review-unused/example --fix-all --ignore-dirs 'src/scripts/ElmFramework','src/scripts/AWSWrapper' --report=json > out.json

But it does not produce JSON content inside the file. It’s instead a standard console output. With a prompt waiting for continuation.

Considering that I’m working in a proprietary repo, I won’t be able to share the project, but I’m open for a call, if you feel like. You can find me as birowsky on Slack.

I’ll now proceed with what mr. Lydell suggests.

via Elm - Latest posts by @jfmengels Jeroen Engels on Fri, 18 Aug 2023 19:25:25 GMT

Hi @Birowsky ![]()

elm-review can help you with this with the NoUnused.Modules rule, but it won’t delete the files directly, you will have to do that manually. So it depends with what you mean with manual labor is not a viable option (not doing any work manually or not spending time figuring out the unused modules).

You can try it out here:

elm-review --template jfmengels/elm-review-unused/example --rules NoUnused.Modules

I have started jotting down ideas on how to support automatic file suppressions in autofixes in elm-review, so it will come one day.

Until then, if you really want this to be automated with deletion, you can probably create a script where you take the output of elm-review --report=json and have a script read that and delete the files automatically (documentation for the format here). You might need to run it several times (or in watch mode) until there are no more errors, as deleting unused modules might uncover more unused modules.

@lydell’s approach should work as well, but you should make sure you don’t delete tests/ or delete modules that contain a main that you were not thinking of. That will be handled in the case of NoUnused.Modules.

I highly recommend you then look at the other rules from the same package, as that will likely uncover a lot more unused code or files.

PS: NoUnused.Modules is actually deprecated, you should use NoUnused.Exports instead, though that does slightly more than what you were asking for here.

Have fun deleting code! ![]()

via Elm - Latest posts by @lydell Simon Lydell on Fri, 18 Aug 2023 17:31:47 GMT

If your goal is to remove only unused modules (files), and doing so in an automated way, I’m not sure elm-review can help (but would love to be proved wrong)!

Someone else might have a much smarter and easier way, but I happen to have a script that finds all modules that are referenced from an entrypoint. Kind of.

So you could:

So about that script.

In elm-watch I need to compute all file paths that some given entrypoints depend on, to know which .elm files should trigger recompilation when they change.

To benchmark that, I created a little CLI script that takes entrypoints as input and finds all the files they depend on recursively. It only prints the duration and the number of files found though. But it’s easy to patch it to print all the file paths. Note: Some file paths might not exist (because reasons), but I don’t think it should matter for your case.

Here’s how to run it (more or less):

git clone git@github.com:lydell/elm-watch.gitcd elm-watchnpm cidiff --git a/scripts/BenchmarkImportWalker.ts b/scripts/BenchmarkImportWalker.ts

index 0427b96..458b6d7 100644

--- a/scripts/BenchmarkImportWalker.ts

+++ b/scripts/BenchmarkImportWalker.ts

@@ -107,7 +107,7 @@ function run(args: Array<string>): void {

console.timeEnd("Run");

switch (result.tag) {

case "Success":

- console.log("allRelatedElmFilePaths", result.allRelatedElmFilePaths.size);

+ console.log(Array.from(result.allRelatedElmFilePaths).join("\n"));

process.exit(0);

case "ImportWalkerFileSystemError":

console.error(result.error.message);

node -r esbuild-register scripts/BenchmarkImportWalker.ts path/to/Entrypoint.elm path/to/Second/Entrypoint.elmvia Elm - Latest posts by @Birowsky on Fri, 18 Aug 2023 16:09:02 GMT

Hey folks, my goal is to remove all modules which are not referenced starting from the entry module. There are thousands of modules in this project, and manual labor is not a viable option.

Does elm-review or anything else help with this?

via Elm - Latest posts by @dwayne Dwayne Crooks on Thu, 17 Aug 2023 14:08:27 GMT

Yes, in Haskell you can do it with a partial function. For e.g. if you know for sure you’re dealing with a non-empty list at some point in your application then you can use head without having to handle a Maybe result.

head :: [a] -> a

head (x:_) = x

via Elm - Latest posts by @benjamin-thomas Benjamin Thomas on Thu, 17 Aug 2023 05:18:41 GMT

Of course, it’s not a “big” problem I wouldn’t loose sleep over it either ![]()

I thought I’d post because maybe someone knows a trick or two I could learn about.

You say you don’t know a way around this in Elm, but presumably there is in another language?

I’m wondering because from the type system’s perspective, all makes sens, but we are left with something not ideal in the end.

fromSafeString looks like a useful convention, I like it.

I thought about it a bit more and I think we can also generalize the problem like this:

{-| `fromMaybe` assumes that applying `f` will never return `Nothing`.

If it does, the parsing fails.

-}

fromMaybe : (a -> Maybe b) -> a -> Parser b

fromMaybe f =

f

>> Maybe.map P.succeed

>> Maybe.withDefault (P.problem "Bug: f produced Nothing!")

decimal : Parser Float

decimal =

chompDecimal

|> P.getChompedString

|> P.andThen (fromMaybe String.toFloat)

Then I feel things are slightly better that way.

via Elm - Latest posts by @dwayne Dwayne Crooks on Thu, 17 Aug 2023 03:07:00 GMT

I understand your point and it would be nice if there was a way around it in Elm. But there isn’t so I don’t lose sleep over it.

When I was writing elm-natural I designed the API so that the Maybe.withDefault was hidden in cases where the user knew for certain they had valid input. See for e.g. fromSafeInt and fromSafeString.

So if you were to write a natural parser it could look like:

natural : Parser Natural

natural =

chompNatural

|> P.mapChompedString (\s () -> Natural.fromSafeString s)

|> lexeme

BTW, I like your decimal version better because in the off chance the conversion does fail you get a clear parser error (assuming you improve the problem message).

via Planet Lisp by on Thu, 17 Aug 2023 00:00:00 GMT

Catchy title, innit? I came up with it while trying to name the development style PAX enables. I wanted something vaguely self-explanatory in a straight out of a marketing department kind of way, with tendrils right into your unconscious. Documentation-driven development sounded just the thing, but it's already taken. Luckily, I came to realize that neither documentation nor any other single thing should drive development. Less luckily for the philosophically disinclined, this epiphany unleashed my inner Richard P. Gabriel. I reckon if there is a point to what follows, it's abstract enough to make it hard to tell.

In programming, there is always a formalization step involved: we must go from idea to code. Very rarely, we have a formal definition of the problem, but apart from purely theoretical exercises, formalization always involves a jump of faith. It's like math word problems: the translation from natural to formal language is out of the scope of formal methods.

We strive to shorten the jump by looking at the solution carefully from different angles (code, docs, specs), and by poking at it and observing its behaviour (tests, logs, input-output, debugging). These facets (descriptive or behavioural) of the solution are redundant with the code and each other. This redundancy is our main tool to shorten the jump. Ultimately, some faith will still be required, but the hope is that if a thing looks good from several angles and behaves well, then it's likely to be a good solution. Programming is empirical.

Tests, on the abstract level, have the same primary job as any other facet: constrain the solution by introducing redundancy. If automatic, they have useful properties: 1. they are cheap to run; 2. inconsistencies between code and tests are found automatically; 3. they exert pressure to keep the code easily testable (when tracking test coverage); 4. sometimes it's easiest to start with writing the tests. On the other hand, tests incur a maintenance cost (often small compared to the gains).

Unlike tests, documentation is mostly in natural language. This has the following considerable disadvantages: documentation is expensive to write and to check (must be read and compared to the implementation, which involves humans for a short while longer), consequently, it easily diverges from the code. It seems like the wrong kind of redundancy. On the positive side, 1. it is valuable for users (e.g. user manual) and also for the programmer to understand the intention; 2. it encourages easily explainable designs; 3. sometimes it's easiest to start with writing the documentation.

Like tests or any other facet, documentation is not always needed, it can drive the development process, or it can lag. But it is a tremendously useful tool to encourage clean design and keep the code comprehensible.

Writing and maintaining good documentation is costly, but the cost can vary greatly. Knuth's Literate Programming took the very opinionated stance of treating documentation of internals as the primary product, which is a great fit for certain types of problems. PAX is much more mellow. It does not require a complete overhaul of the development process or tooling; giving up interactive development would be too high a price. PAX is chiefly about reducing the distance between code and its documentation, so that they can be changed together. By doing so, it reduces the maintenance cost, improves both the design and the documentation, while making the code more comprehensible.

In summary,

Multiple, redundant facets are needed to have confidence in a solution.

Maintaining them has a cost.

This cost shapes the solution.

There is no universally good set of facets.

There need not be a primary facet to drive development.

We mentally switch between facets frequently.

Our tools should make working with multiple facets easier.

via Elm - Latest posts by @benjamin-thomas Benjamin Thomas on Wed, 16 Aug 2023 21:54:12 GMT

Interesting post.

I love parser combinators, I’ve learnt a bit about them via OCaml (just dabbling).

One thing I noticed is that it’s pretty much the only time where I’d make sens to me to actually introduce a runtime error, to make the program fail loud and clear (when something goes horribly horribly wrong).

Because it seems to me that some operations can’t be expressed correctly by the type system when parsing.

For instance, in your decimal function, you use Maybe.withDefault to satisfy the type system’s constraints. But that constraint is a little bit artificial, because you do know that only floats will come out of chompDecimal.

decimal : Parser Float

decimal =

chompDecimal

|> P.mapChompedString (\s () -> String.toFloat s |> Maybe.withDefault 0)

|> lexeme

So in this specific case, it feels to me that satisfying the type system actually gives no value (it’s extra work for zero benefit)

This is what I’d prefer to write for clarity. But even then I’m forced to represent that same branch that’ll actually never trigger.

decimal : Parser Float

decimal =

chompDecimal

|> P.getChompedString

|> P.andThen

(\s ->

case String.toFloat s of

Just n ->

P.succeed n

Nothing ->

P.problem "impossible"

)

Any thoughts?

via Elm - Latest posts by @dirkbj Dirk Johnson on Wed, 16 Aug 2023 20:32:40 GMT

This is awesome. Thanks for the explanation and sharing the code. ![]()

via Planet Lisp by on Wed, 16 Aug 2023 12:36:00 GMT

Hello

just a quick summer update about some documentation cleanup and some checks on debugging HEΛP.

Have a look at the (small) changes and keep sending feedback.

via Elm - Latest posts by @dwayne Dwayne Crooks on Wed, 16 Aug 2023 09:23:00 GMT

Learn about useful chompers that can help you write better parsers.

via Elm - Latest posts by @jfmengels Jeroen Engels on Mon, 14 Aug 2023 16:04:04 GMT

Hi everyone ![]()

New Elm Radio episode, in which we discuss how to avoid unused Elm code, why it matters, and what leads to unused code in the first place.

Hope you enjoy it!

via Planet Lisp by on Mon, 14 Aug 2023 00:00:00 GMT

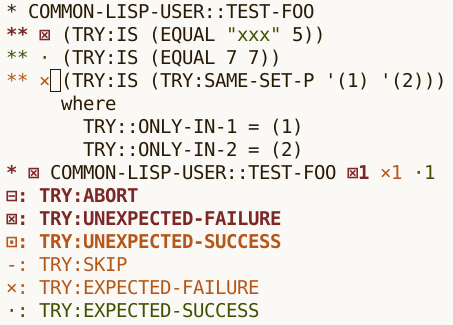

Try, my test anti-framework, has just got light Emacs integration. Consider the following test:

(deftest test-foo ()

(is (equal "xxx" 5))

(is (equal 7 7))

(with-failure-expected (t)

(is (same-set-p '(1) '(2)))))The test can be run from Lisp with (test-foo) (interactive

debugging) or (try 'test-foo) (non-interactive), but now there is

a third option: run it from Emacs and get a couple of conveniences

in return. In particular, with M-x mgl-try then entering

test-foo, a new buffer pops up with the test output, which is

font-locked based on the type of the outcome. The buffer also has

outline minor mode, which matches the hierarchical structure of the

output.

The buffer's major mode is

Lisp, so

The buffer's major mode is

Lisp, so M-. and all the usual key bindings work. In additition,

a couple of keys bound to navigation commands are available. See the

documentation

for the details. Note that Quicklisp has an older version of Try

that does not have Emacs integration, so you'll need to use

https://github.com/melisgl/try

until the next Quicklisp release.

via Elm - Latest posts by @solificati on Fri, 11 Aug 2023 17:50:50 GMT

Or you can use strict versions for reproducibility and use dependabot for bumping dependencies. That way you won’t get random CI errors because someone published new package. All of deps updates are in separate PRs, so every error is minimal.

via Planet Lisp by on Sat, 05 Aug 2023 18:46:00 GMT

Many years ago I was under the delusion that if Lisp were more “normal looking” it would be adopted more readily. I thought that maybe inferring the block structure from the indentation (the “off-sides rule”) would make Lisp easier to read. It does, sort of. It seems to make smaller functions easier to read, but it seems to make it harder to read large functions — it's too easy to forget how far you are indented if there is a lot of vertical distance.

I was feeling pretty good about this idea until I tried to write a macro. A macro’s implementation function has block structure, but so does the macro’s replacement text. It becomes ambiguous whether the indentation is indicating block boundaries in the macro body or in it’s expansion.

A decent macro needs a templating system. Lisp has backquote (aka quasiquote). But notice that unquoting comes in both a splicing and non-splicing form. A macro that used the off-sides rule would need templating that also had indenting and non-indenting unquoting forms. Trying to figure out the right combination of unquoting would be a nightmare.

The off-sides rule doesn’t work for macros that have non-standard

indentation. Consider if you wanted to write a macro similar

to unwind-protect or try…finally.

Or if you want to have a macro that expands into just

the finally clause.

It became clear to me that there were going to be no simple rules. It would be hard to design, hard to understand, and hard to use. Even if you find parenthesis annoying, they are relatively simple to understand and simple to use, even in complicated situations. This isn’t to say that you couldn’t cobble together a macro system that used the off-sides rule, it would just be much more complicated and klunkier than Lisp’s.